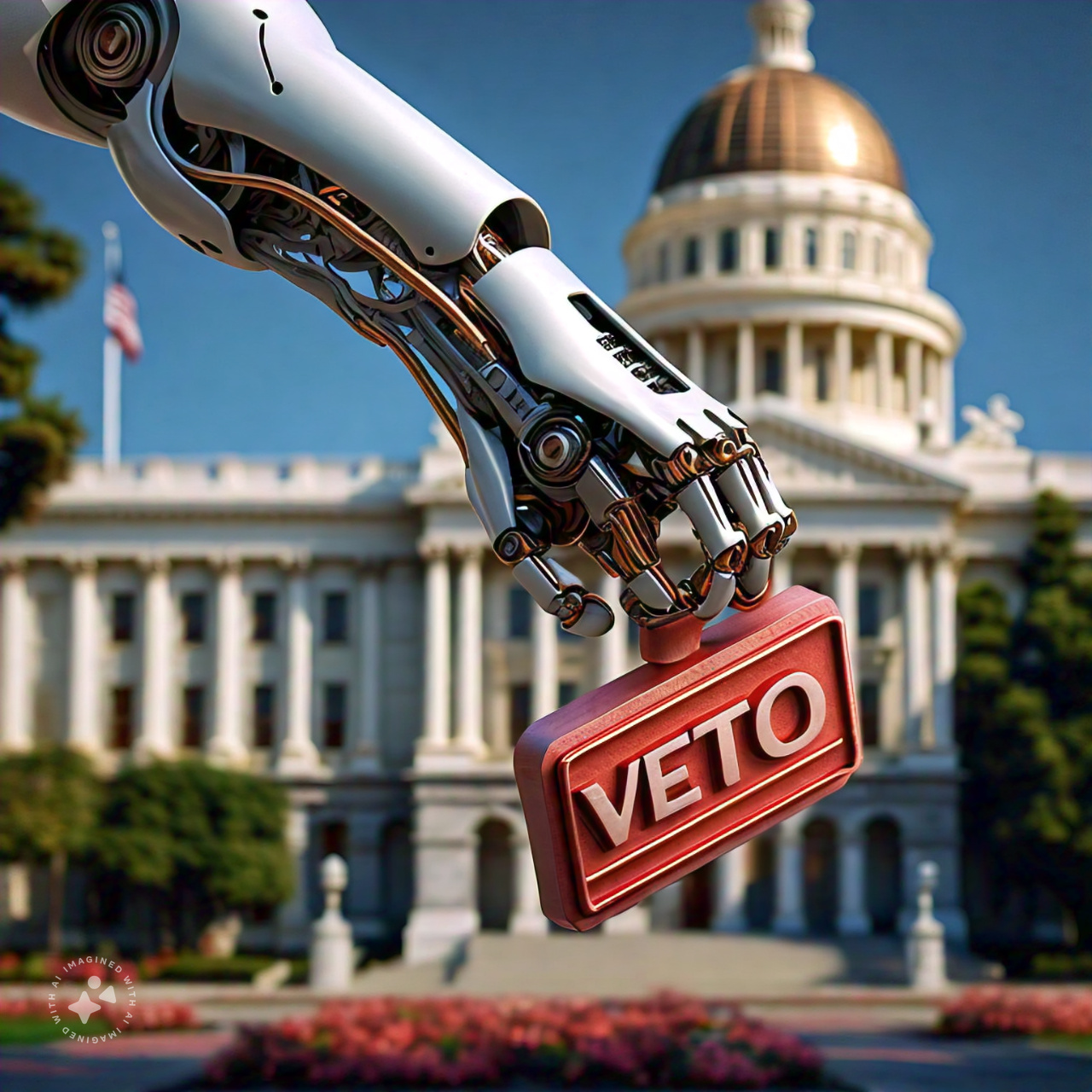

California Governor Gavin Newsom has vetoed a contentious AI safety bill, citing concerns that it could hinder innovation and drive AI companies out of the state. The bill aimed to establish stringent safety standards for the largest, most powerful AI models. However, Newsom argued that it did not take into account whether an AI system is deployed in a high-risk environment, involves critical decision-making, or uses sensitive data, and so failed to distinguish between the varying levels of risk posed by different AI systems.

Key Points:

- Veto Rationale: Newsom stated that the bill would apply “stringent standards to even the most basic functions” and could stifle innovation in the AI industry.

- Alternative Approach: Newsom has asked experts to help develop "workable guardrails" for AI, grounded in an empirical, science-based trajectory analysis of frontier AI models, their capabilities, and their risks.

- Risk Assessment: State agencies have been ordered to expand their assessment of risks from potential catastrophic events tied to AI use.

- Author's Reaction: The bill's author, Democratic State Senator Scott Wiener, argued that the veto makes California less safe because companies building powerful AI technology will now face no binding restrictions.

- Future Plans: Newsom has expressed willingness to work with the legislature on AI legislation during its next session.

National Context:

The veto comes as legislation in the U.S. Congress to set safeguards for AI has stalled, while the Biden administration advances its own proposals for regulatory oversight of AI. The White House has emphasized the need for responsible AI development, issuing an executive order that outlines principles for the safe, secure, and trustworthy development and use of AI¹. The order highlights the importance of mitigating AI-related risks, including those to national security, equity, and civil rights.